Table of Contents

Evolutionary Algorithms

For approximately 4 billion years, there has been life on earth. Apart from the amazing diversity of species, the process of evolution has created many organisms and forms that are well adapted to their respective environment, partly even in an optimal way. Why should one not try to come to new and more robust optimization procedures by mimicking fundamental evolutionary principles?

At the beginning of the 1960s, different researchers came up with this question independently of each other. In Germany, this has led to evolution strategies (ES), in the USA to genetic algorithms (GA) and the concept of evolutionary programming (EP). These procedures as well as genetic programming (GP), which transfers evolutionary principles into the search space of programming languages, are summarized today under the names evolutionary algorithms (EA) or evolutionary computation (EC). The different classes of evolutionary algorithms differ by the representation of the individuals and by their variation and selection operators. An important advantage of all evolutionary procedures is their inherent, scalable parallelism. Thus, EA can easily be adapted to any kind of parallel data processing architecture.

At the beginning of the 1960s, different researchers came up with this question independently of each other. In Germany, this has led to evolution strategies (ES), in the USA to genetic algorithms (GA) and the concept of evolutionary programming (EP). These procedures as well as genetic programming (GP), which transfers evolutionary principles into the search space of programming languages, are summarized today under the names evolutionary algorithms (EA) or evolutionary computation (EC). The different classes of evolutionary algorithms differ by the representation of the individuals and by their variation and selection operators. An important advantage of all evolutionary procedures is their inherent, scalable parallelism. Thus, EA can easily be adapted to any kind of parallel data processing architecture.

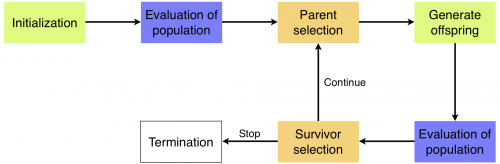

A number of individuals form a population, which evolves from one generation to the next by means of the following genetic operators: Mutation is a stochastic operator that modifies the genetic information of an individual after recombination has assembled the genetic material of two or more parents to a descendant. Selection then determines which individuals may reproduce in the next generation.

Evolution strategies

Although invented originally for experimental optimization and discrete search spaces, typically the real numbers are the search domain of evolution strategies since their implementation on computers. For this case, the theory of ES progressed furthest. Accordingly, an individual consists of an n-dimensional real valued vector x representing the object variables and a number of strategy parameters controlling mutation. The number of strategy parameters can vary between 1 and n + n(n - 1)/2 depending on the user's choice.

The special feature of ES is recombining and mutating both object and strategy parameters. This and an appropriate selection pressure provide the possibility of a permanent self-adaptation of mean step sizes, and sometimes even the preferred directions the strategy takes in the search space emerge. For the success of the self-adjustment, a surplus of descendants is crucial, which is diminished again by selection. Researchers in our group examine convergence properties, the influence of different operators, and extensions of the basic algorithm for multi-objective and dynamic optimization problems. Different parallel approaches are being investigated, too.

Genetic algorithms

Typically, in GA, bit strings represent an individual. This does not mean, however, that GA can only solve pseudo-Boolean problems, for which this coding is directly suitable. More complex data structures like real numbers, lists, trees etc. can be mapped to bit strings in an appropriate way. However, each additional mapping between the binary and the problem representation weakens the necessary causality between genotype and phenotype. For each optimization problem one has to decide whether to choose a representation tailored for the problem and to adapt the genetic operators, or using a standard GA by coding the decision variables more or less skillfully in a bit string.

Selection chooses, at least, two individuals from the population for the next mating with a probability proportional to their relative fitness. The descendant receives genes from both parents. Then, the descendant's bits are mutated (i.e. inverted) with a small probability. The individuals produced in this way replace the parental generation. This procedure is repeated until a termination criterion is fulfilled.

Genetic programming

Genetic programming (GP) designates a set of evolutionary processes that generate computer programs representing algorithms. These algorithms are meant to solve a specic problem. The problem solving capability (fitness) of such genotypes is given by its algorithm's capability of approximating a problem-specific input-output relation. GP processes can be used practically in many problem domains like data mining, control, robotics, or economics.

Depending on the representation of individuals, different variants of GP may be distinguished nowadays. In the traditional approach programs are represented as syntax trees of a functional programming language. Another established variant, linear GP, uses imperative program code instead, and includes the evolution of machine programs. In the AIMGP approach (Automatic Induction of Machine Code with Genetic Programming) programs are executed directly as binary machine code without passing an interpretation step first. By this, the time-critical step of genetic programming is accelerated significantly. Another possibility of reducing the execution time of programs is the removal of non-effective code before the fitness of a program is calculated. The existence of non-used parts of programs is typical for the linear representation, in particular. Other GP approaches use program graphs or combine different forms of representation within one individual. In this context especially branching graphs of linear instruction sequences (so-called linear graphs) may promise a better performance.